library(tidyverse)

library(HistData)

set.seed(1983)

galton_heights <- GaltonFamilies |>

filter(gender == "male") |>

group_by(family) |>

sample_n(1) |>

ungroup() |>

select(father, childHeight) |>

rename(son = childHeight)20 Regression

20.1 Case study: is height hereditary?

Suppose we were asked to summarize the father and son data. Since both distributions are well approximated by the normal distribution, we could use the two averages and two standard deviations as summaries:

galton_heights |>

summarize(mean(father), sd(father), mean(son), sd(son))# A tibble: 1 × 4

`mean(father)` `sd(father)` `mean(son)` `sd(son)`

<dbl> <dbl> <dbl> <dbl>

1 69.1 2.55 69.2 2.71However, this summary fails to describe an important characteristic of the data: the trend that the taller the father, the taller the son.

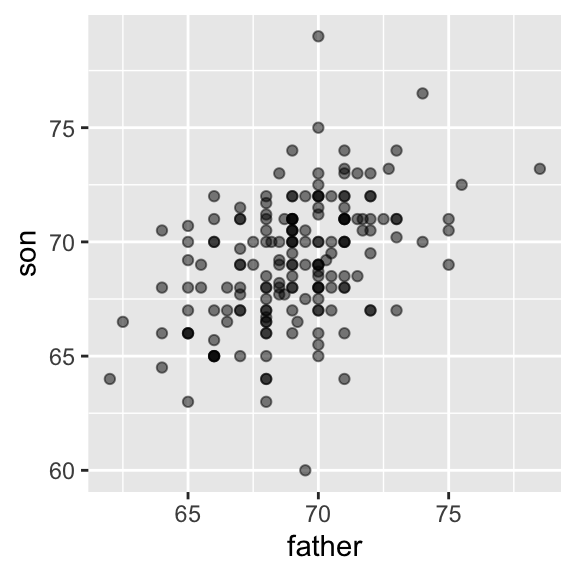

galton_heights |> ggplot(aes(father, son)) +

geom_point(alpha = 0.5)

20.2 The correlation coefficient

The correlation coefficient is defined for a list of pairs \((x_1, y_1), \dots, (x_n,y_n)\) as the average of the product of the standardized values:

\[ \rho = \frac{1}{n} \sum_{i=1}^n \left( \frac{x_i-\mu_x}{\sigma_x} \right)\left( \frac{y_i-\mu_y}{\sigma_y} \right) \]

rho <- mean(scale(x) * scale(y))Let’s see why this makes sense

The correlation coefficient is always between -1 and 1. We can show this mathematically: consider that we can’t have higher correlation than when we compare a list to itself (perfect correlation) and in this case the correlation is:

\[ \rho = \frac{1}{n} \sum_{i=1}^n \left( \frac{x_i-\mu_x}{\sigma_x} \right)^2 = \frac{1}{\sigma_x^2} \frac{1}{n} \sum_{i=1}^n \left( x_i-\mu_x \right)^2 = \frac{1}{\sigma_x^2} \sigma^2_x = 1 \]

A similar derivation, but with \(x\) and its exact opposite, proves the correlation has to be bigger or equal to -1.

For other pairs, the correlation is in between -1 and 1. The correlation, computed with the function cor, between father and son’s heights is about 0.5:

galton_heights |> summarize(r = cor(father, son)) |> pull(r)[1] 0.4334102For reasons similar to those explained in Section Section 19.2.3 for the standard deviation, cor(x,y) divides by length(x)-1 rather than length(x).

To see what data looks like for different values of \(\rho\), here are six examples of pairs with correlations ranging from -0.9 to 0.99:

20.2.1 Sample correlation is a random variable

Let’s consider our Galton heights to be the population and take random samples of size 25:

r <- sample_n(galton_heights, 25, replace = TRUE) |>

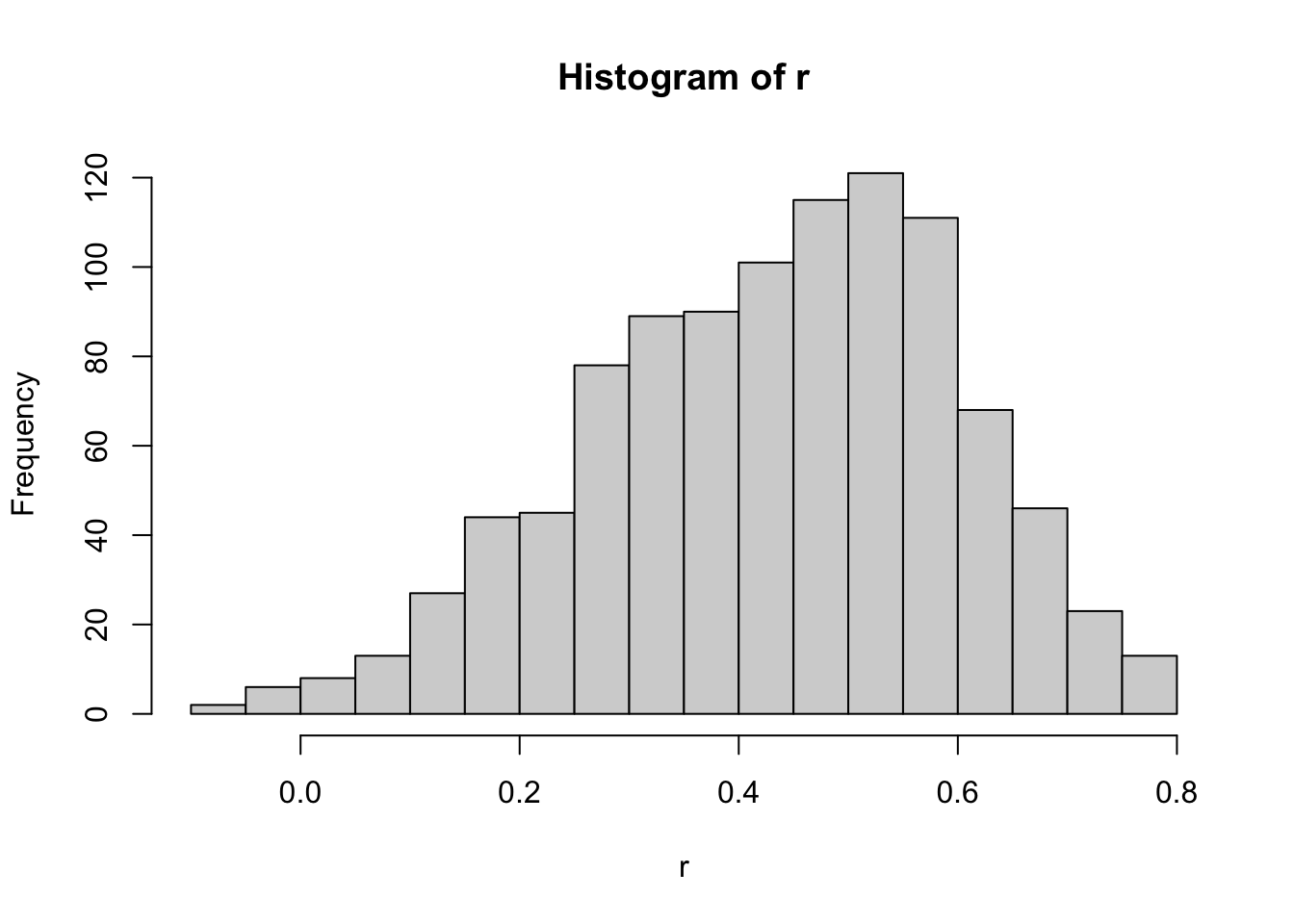

summarize(r = cor(father, son)) |> pull(r)`r`` is a random variable. We can run a Monte Carlo simulation to see its distribution:

B <- 1000

N <- 25

r <- replicate(B, {

sample_n(galton_heights, N, replace = TRUE) |>

summarize(r = cor(father, son)) |>

pull(r)

})

hist(r, breaks = 20)

We see that the expected value of `r`` is the population correlation:

mean(r)[1] 0.4316779and that it has a relatively high standard error relative to the range of values R can take:

sd(r)[1] 0.1652205So, when interpreting correlations, remember that correlations derived from samples are estimates containing uncertainty.

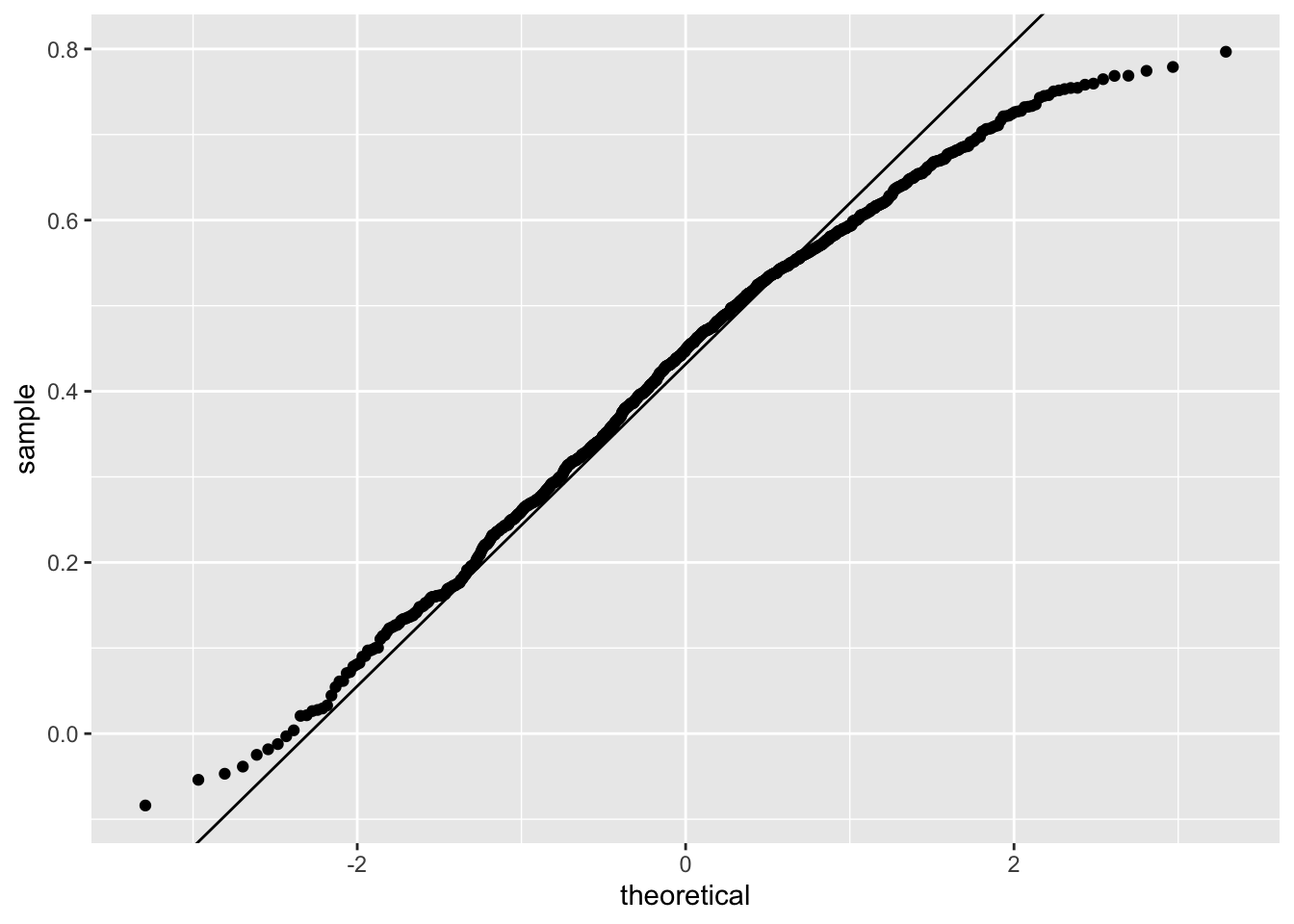

Does CLT apply?

The SE can be shown to be

\[ \sqrt{\frac{1-r^2}{N-2}} \]

In our example, \(N=25\) does not seem to be large enough to make the approximation a good one:

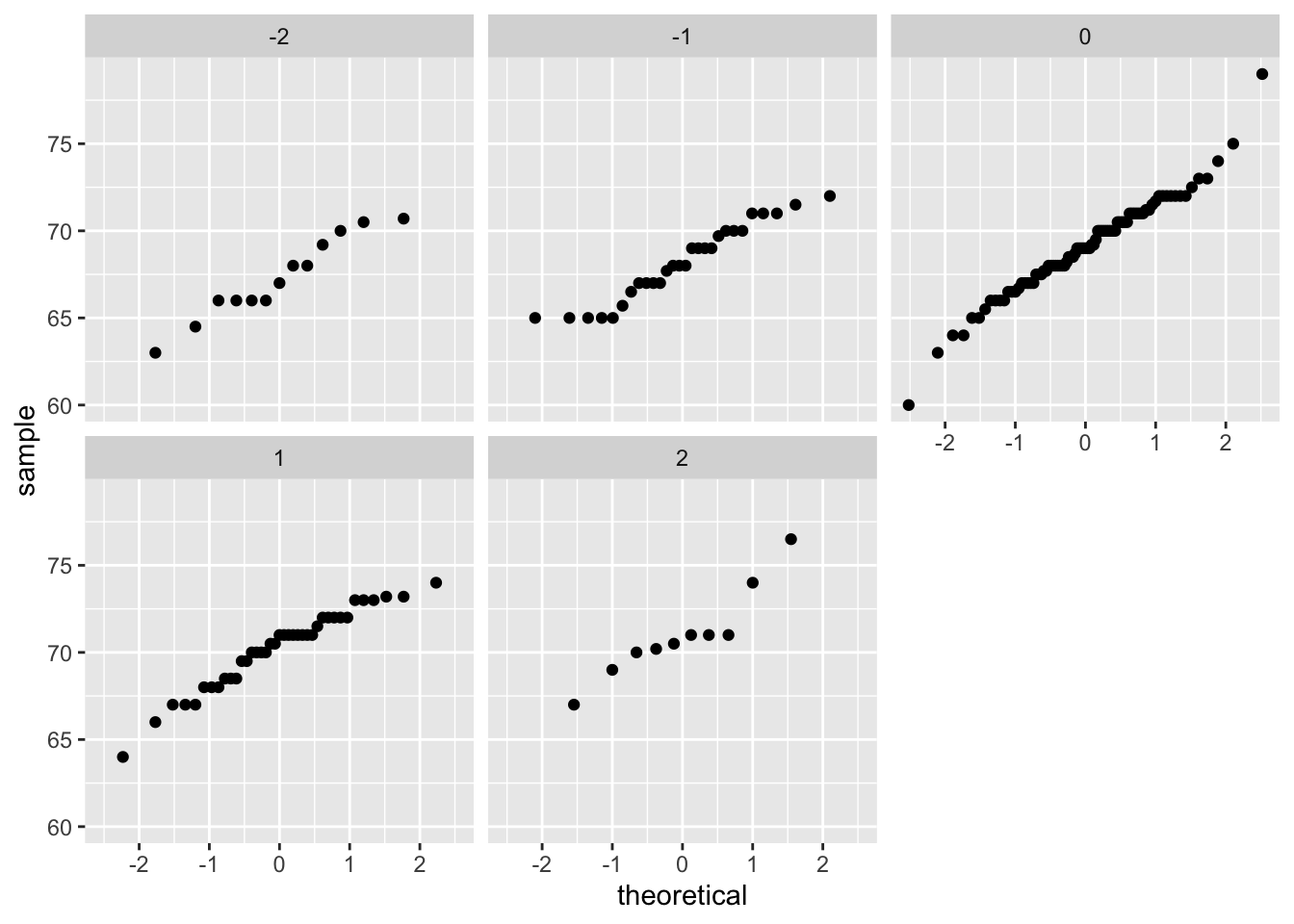

ggplot(aes(sample = r), data = data.frame(r)) +

stat_qq() +

geom_abline(intercept = mean(r), slope = sqrt((1 - mean(r)^2)/(N - 2)))

If you increase \(N\), you will see the distribution converging to normal.

B <- 1000

N <- 50

r <- replicate(B, {

sample_n(galton_heights, N, replace = TRUE) |>

summarize(r = cor(father, son)) |>

pull(r)

})

ggplot(aes(sample = r), data = data.frame(r)) +

stat_qq() +

geom_abline(intercept = mean(r), slope = sqrt((1 - mean(r)^2)/(N - 2)))

20.2.2 Correlation is not always a useful summary

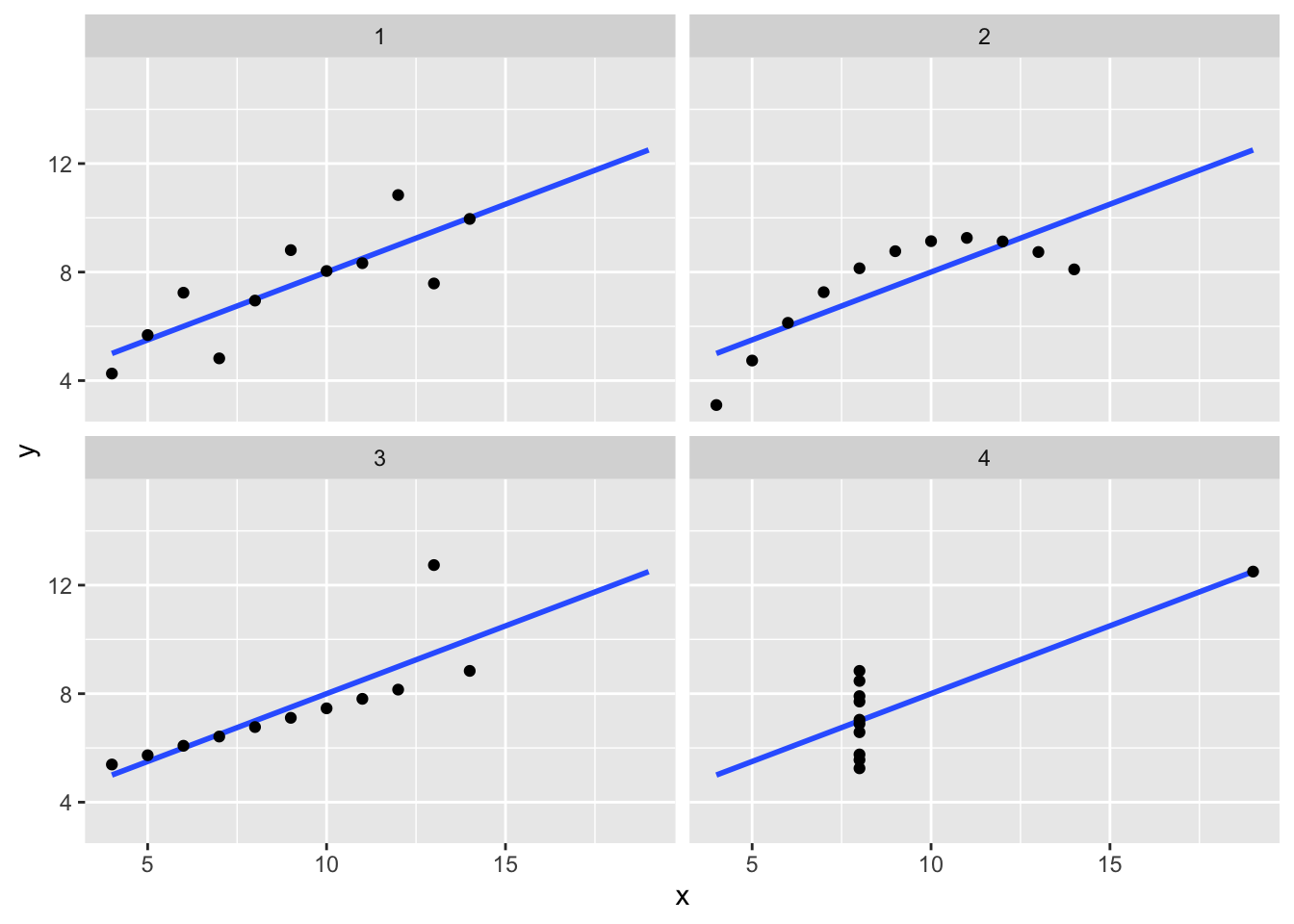

Correlation is not always a good summary of the relationship between two variables. The following four artificial datasets, referred to as Anscombe’s quartet, famously illustrate this point. All these pairs have a correlation of 0.82:

`geom_smooth()` using formula = 'y ~ x'

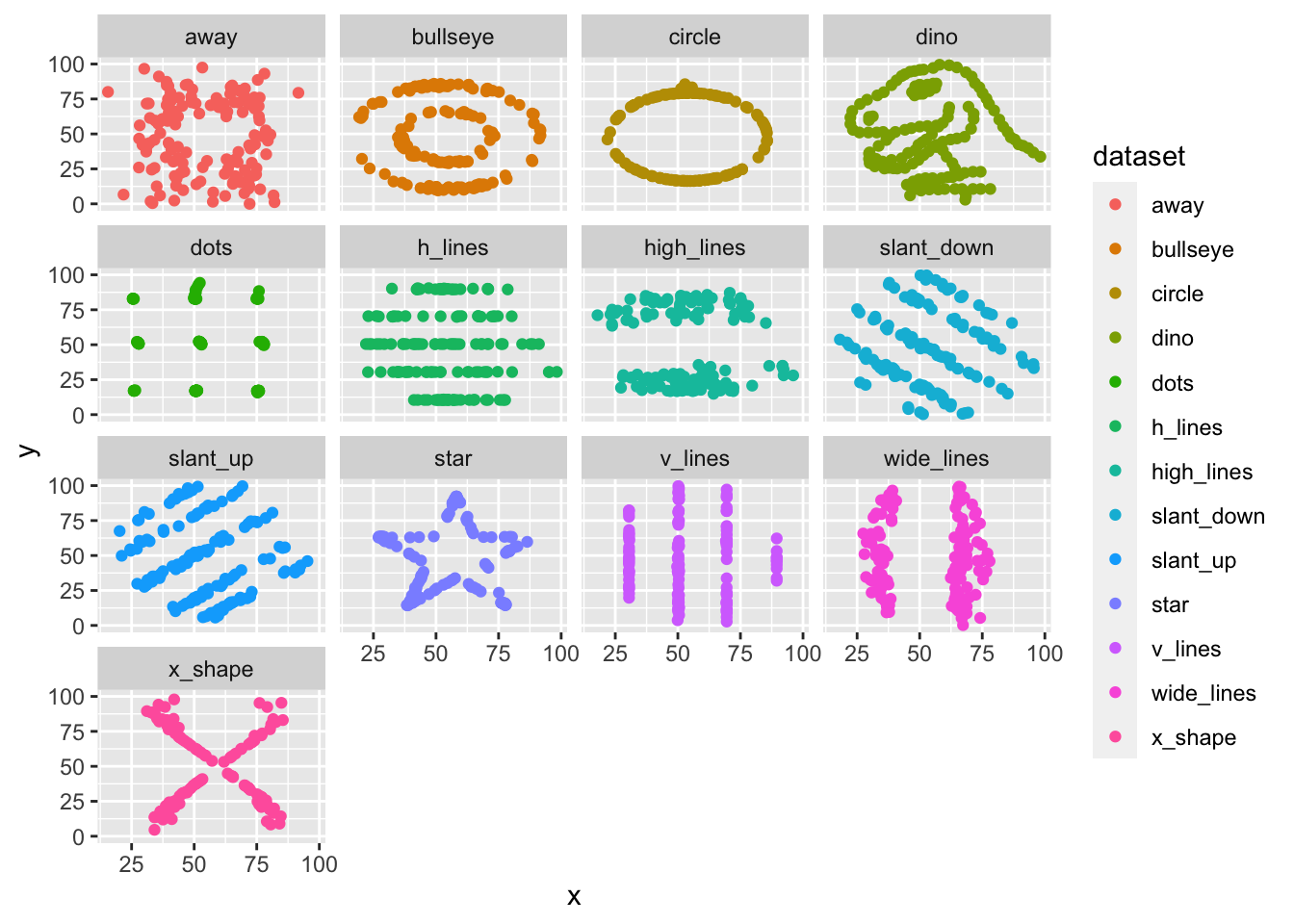

Here are some other fun examples:

library(datasauRus)

ggplot(datasaurus_dozen, aes(x = x, y = y, color = dataset)) +

geom_point() +

facet_wrap(~dataset)

Correlation is only meaningful in a particular context. To help us understand when it is that correlation is meaningful as a summary statistic, we will return to the example of predicting a son’s height using his father’s height. This will help motivate and define linear regression. We start by demonstrating how correlation can be useful for prediction.

20.3 Conditional expectations

Suppose we are asked to guess the height of a randomly selected son and we don’t know his father’s height. How do we do this?

Mathematically, we can show that the conditional expectation

\[ f(x) = \mbox{E}(Y \mid X = x) \]

minimizes the expected squared error (MSE):

\[ \mbox{E}[\{Y -f(X)\}^2] \]

with \(x\) representing the fixed value that defines that subset, for example 72 inches.

We denote the standard deviation of the strata with

\[ \mbox{SD}(Y \mid X = x) = \sqrt{\mbox{Var}(Y \mid X = x)} \]

Because the conditional expectation \(E(Y\mid X=x)\) is the best predictor for the random variable \(Y\) for an individual in the strata defined by \(X=x\), many data science challenges reduce to estimating this quantity. The conditional standard deviation quantifies the precision of the prediction.

However, we often have a limited number of points to estimate this conditional expectation. For example

sum(galton_heights$father == 72)[1] 8fathers that are exactly 72-inches. If we change the number to 72.5, we get even fewer data points:

sum(galton_heights$father == 72.5)[1] 1A practical way to improve these estimates of the conditional expectations, is to define strata of with similar values of \(x\). In our example, we can round father heights to the nearest inch and assume that they are all 72 inches. If we do this, we end up with the following prediction for the son of a father that is 72 inches tall:

conditional_avg <- galton_heights |>

filter(round(father) == 72) |>

summarize(avg = mean(son)) |>

pull(avg)

conditional_avg[1] 70.5Note that a 72-inch father is taller than average but smaller than 72. We see the same for someone slightly shorted than average:

conditional_avg <- galton_heights |>

filter(round(father) == 67) |>

summarize(avg = mean(son)) |>

pull(avg)

conditional_avg[1] 68.71818The sons of have regressed some to the average height. We notice that the reduction in how many SDs taller is about 0.5, which happens to be the correlation. As we will see in a later section, this is not a coincidence.

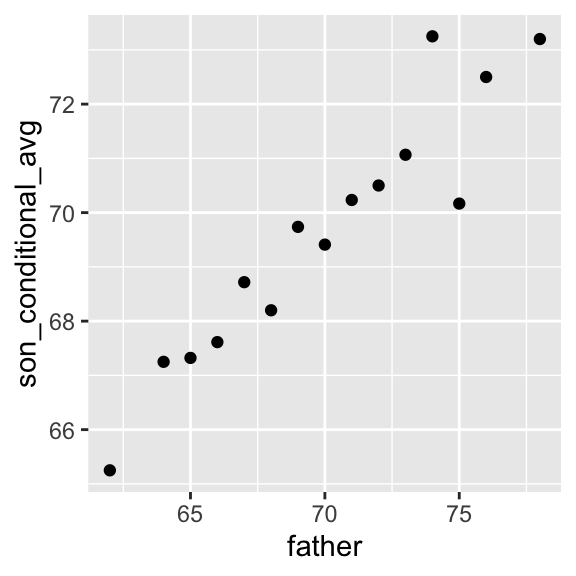

If we want to make a prediction of any height, not just 72, we could apply the same approach to each strata. Stratification followed by boxplots lets us see the distribution of each group:

galton_heights |>

mutate(father_strata = factor(round(father))) |>

ggplot(aes(father_strata, son)) +

geom_boxplot() +

geom_point()

Not surprisingly, the centers of the groups are increasing with height. Furthermore, these centers appear to follow a linear relationship. Below we plot the averages of each group. If we take into account that these averages are random variables with standard errors, the data is consistent with these points following a straight line:

The fact that these conditional averages follow a line is not a coincidence. In the next section, we explain that the line these averages follow is what we call the regression line, which improves the precision of our estimates. However, it is not always appropriate to estimate conditional expectations with the regression line so we also describe Galton’s theoretical justification for using the regression line.

20.4 The regression line

If we are predicting a random variable \(Y\) knowing the value of another \(X=x\) using a regression line, then we predict that for every standard deviation, \(\sigma_X\), that \(x\) increases above the average \(\mu_X\), our prediction \(\hat{Y}\) increase \(\rho\) standard deviations \(\sigma_Y\) above the average \(\mu_Y\) with \(\rho\) the correlation between \(X\) and \(Y\). The formula for the regression is therefore:

\[ \left( \frac{\hat{Y}-\mu_Y}{\sigma_Y} \right) = \rho \left( \frac{x-\mu_X}{\sigma_X} \right) \]

We can rewrite it like this:

\[ \hat{Y} = \mu_Y + \rho \left( \frac{x-\mu_X}{\sigma_X} \right) \sigma_Y \]

Note that if the correlation is positive and lower than 1, our prediction is closer, in standard units, to the average height than the value used to predict, \(x\), is to the average of the \(x\)s. This is why we call it regression: the son regresses to the average height. In fact, the title of Galton’s paper was: Regression toward mediocrity in hereditary stature. To add regression lines to plots, we will need the above formula in the form:

\[ \hat{Y} = b + mx \mbox{ with slope } m = \rho \frac{\sigma_y}{\sigma_x} \mbox{ and intercept } b=\mu_y - m \mu_x \]

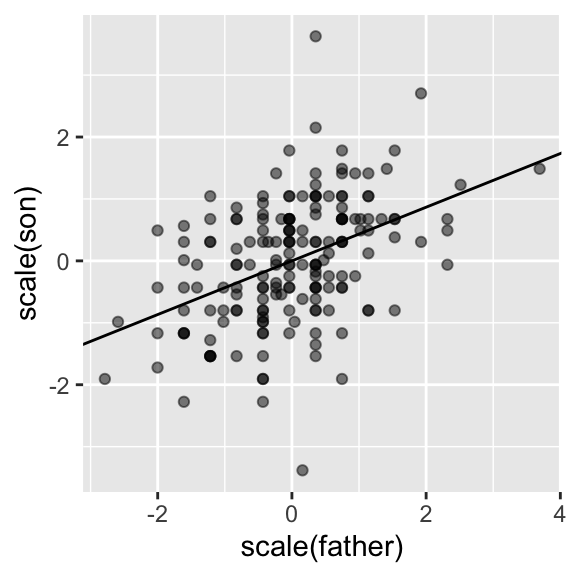

Here we add the regression line to the original data:

mu_x <- mean(galton_heights$father)

mu_y <- mean(galton_heights$son)

s_x <- sd(galton_heights$father)

s_y <- sd(galton_heights$son)

r <- cor(galton_heights$father, galton_heights$son)

galton_heights |>

ggplot(aes(father, son)) +

geom_point(alpha = 0.5) +

geom_abline(slope = r * s_y/s_x, intercept = mu_y - r * s_y/s_x * mu_x)

The regression formula implies that if we first standardize the variables, that is subtract the average and divide by the standard deviation, then the regression line has intercept 0 and slope equal to the correlation \(\rho\). You can make same plot, but using standard units like this:

galton_heights |>

ggplot(aes(scale(father), scale(son))) +

geom_point(alpha = 0.5) +

geom_abline(intercept = 0, slope = r)

20.5 Regression improves precision

Let’s compare the two approaches to prediction that we have presented:

- Round fathers’ heights to closest inch, stratify, and then take the average.

- Compute the regression line and use it to predict.

We use a Monte Carlo simulation sampling \(N=50\) families:

B <- 1000

N <- 50

set.seed(1983)

conditional_avg <- replicate(B, {

dat <- sample_n(galton_heights, N)

dat |> filter(round(father) == 72) |>

summarize(avg = mean(son)) |>

pull(avg)

})

regression_prediction <- replicate(B, {

dat <- sample_n(galton_heights, N)

mu_x <- mean(dat$father)

mu_y <- mean(dat$son)

s_x <- sd(dat$father)

s_y <- sd(dat$son)

r <- cor(dat$father, dat$son)

mu_y + r*(72 - mu_x)/s_x*s_y

})Although the expected value of these two random variables is about the same:

mean(conditional_avg, na.rm = TRUE)[1] 70.49368mean(regression_prediction)[1] 70.50941The standard error for the regression prediction is substantially smaller:

sd(conditional_avg, na.rm = TRUE)[1] 0.9635814sd(regression_prediction)[1] 0.4520833The regression line is therefore much more stable than the conditional mean. There is an intuitive reason for this. The conditional average is computed on a relatively small subset: the fathers that are about 72 inches tall. In fact, in some of the permutations we have no data, which is why we use na.rm=TRUE. The regression always uses all the data.

20.6 Bivariate normal distribution

Correlation and the regression slope are a widely used summary statistic, but they are often misused or misinterpreted. Anscombe’s examples provide over-simplified cases of dataset in which summarizing with correlation would be a mistake. But there are many more real-life examples.

The main way we motivate the use of correlation involves what is called the bivariate normal distribution.

When a pair of random variables is approximated by the bivariate normal distribution, scatterplots look like ovals. As we saw in Section Section 20.2), they can be thin (high correlation) or circle-shaped (no correlation.

A more technical way to define the bivariate normal distribution is the following: if \(X\) is a normally distributed random variable, \(Y\) is also a normally distributed random variable, and the conditional distribution of \(Y\) for any \(X=x\) is approximately normal, then the pair is approximately bivariate normal. When three or more variables have the property that each pair is bivariate normal, we say the variables follow a multivariate normal distribution or that they are jointly normal.

If we think the height data is well approximated by the bivariate normal distribution, then we should see the normal approximation hold for each strata. Here we stratify the son heights by the standardized father heights and see that the assumption appears to hold:

galton_heights |>

mutate(z_father = round((father - mean(father)) / sd(father))) |>

filter(z_father %in% -2:2) |>

ggplot() +

stat_qq(aes(sample = son)) +

facet_wrap( ~ z_father)

Now we come back to defining correlation. Galton used mathematical statistics to demonstrate that, when two variables follow a bivariate normal distribution, computing the regression line is equivalent to computing conditional expectations. We don’t show the derivation here, but we can show that under this assumption, for any given value of \(x\), the expected value of the \(Y\) in pairs for which \(X=x\) is:

\[ \mbox{E}(Y | X=x) = \mu_Y + \rho \frac{x-\mu_X}{\sigma_X}\sigma_Y \]

This is the regression line, with slope \[\rho \frac{\sigma_Y}{\sigma_X}\] and intercept \(\mu_y - m\mu_X\). It is equivalent to the regression equation we showed earlier which can be written like this:

\[ \frac{\mbox{E}(Y \mid X=x) - \mu_Y}{\sigma_Y} = \rho \frac{x-\mu_X}{\sigma_X} \]

This implies that, if our data is approximately bivariate, the regression line gives the conditional probability. Therefore, we can obtain a much more stable estimate of the conditional expectation by finding the regression line and using it to predict.

In summary, if our data is approximately bivariate, then the conditional expectation, the best prediction of \(Y\) given we know the value of \(X\), is given by the regression line.

20.7 Variance explained

The bivariate normal theory also tells us that the standard deviation of the conditional distribution described above is:

\[ \mbox{SD}(Y \mid X=x ) = \sigma_Y \sqrt{1-\rho^2} \]

To see why this is intuitive, notice that without conditioning, \(\mbox{SD}(Y) = \sigma_Y\), we are looking at the variability of all the sons. But once we condition, we are only looking at the variability of the sons with a tall, 72-inch, father. This group will all tend to be somewhat tall so the standard deviation is reduced.

Specifically, it is reduced to \(\sqrt{1-\rho^2} = \sqrt{1 - 0.25}\) = 0.87 of what it was originally. We could say that father heights “explain” 13% of the variability observed in son heights.

The statement “\(X\) explains such and such percent of the variability” is commonly used in academic papers. In this case, this percent actually refers to the variance (the SD squared). So if the data is bivariate normal, the variance is reduced by \(1-\rho^2\), so we say that \(X\) explains \(1- (1-\rho^2)=\rho^2\) (the correlation squared) of the variance.

But it is important to remember that the “variance explained” statement only makes sense when the data is approximated by a bivariate normal distribution.

20.8 There are two regression lines

We computed a regression line to predict the son’s height from father’s height. We used these calculations:

mu_x <- mean(galton_heights$father)

mu_y <- mean(galton_heights$son)

s_x <- sd(galton_heights$father)

s_y <- sd(galton_heights$son)

r <- cor(galton_heights$father, galton_heights$son)

m_1 <- r * s_y / s_x

b_1 <- mu_y - m_1*mu_xwhich gives us the function \(\mbox{E}(Y\mid X=x) =\) 37.3 + 0.46 \(x\).

What if we want to predict the father’s height based on the son’s? It is important to know that this is not determined by computing the inverse function: \(x = \{ \mbox{E}(Y\mid X=x) -\) 37.3 \(\} /\) 0.5.

We need to compute \(\mbox{E}(X \mid Y=y)\). Since the data is approximately bivariate normal, the theory described above tells us that this conditional expectation will follow a line with slope and intercept:

m_2 <- r * s_x / s_y

b_2 <- mu_x - m_2 * mu_ySo we get \(\mbox{E}(X \mid Y=y) =\) 40.9 + 0.41y. Again we see regression to the average: the prediction for the father is closer to the father average than the son heights \(y\) is to the son average.

Here is a plot showing the two regression lines, with blue for the predicting son heights with father heights and red for predicting father heights with son heights:

galton_heights |>

ggplot(aes(father, son)) +

geom_point(alpha = 0.5) +

geom_abline(intercept = b_1, slope = m_1, col = "blue") +

geom_abline(intercept = -b_2/m_2, slope = 1/m_2, col = "red")

20.9 Linear models

Let’s learn about the connection between regression and linear models.

If data is bivariate normal then the conditional expectations follow the regression line. This resul is derived, not assumed

In practice it is common to explicitly write down a model that describes the relationship between two or more variables using a linear model.

linear here does not refer to lines exclusively, but rather to the fact that the conditional expectation is a linear combination of known quantities. For example,

\[2 + 3x - 4y + 5z\]

is a linear combination of \(x\), \(y\), and \(z\).

- If we write

\[ Y = \beta_0 + \beta_1 x + \varepsilon \]

then if we assume \(\varepsilon\) follows a normal distribution with expected value 0 and fixed standard deviation, then \(Y\) has the same properties as the regression setup gave us.

In statistical textbooks, the \(\varepsilon\)s are referred to as “errors,” which originally represented measurement errors in the initial applications of these models. These errors were associated with inaccuracies in measuring height, weight, or distance. However, the term “error” is now used more broadly, even when the \(\varepsilon\)s do not necessarily signify an actual error. For instance, in the case of height, if someone is 2 inches taller than expected based on their parents’ height, those 2 inches should not be considered an error. Despite its lack of descriptive accuracy, the term “error” is employed to elucidate the unexplained variability in the model, unrelated to other included terms.

- Linear model for Galton’s data, we would denote the \(N\) observed father heights with \(x_1, \dots, x_n\), then we model the \(N\) son heights we are trying to predict with:

\[ Y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \, i=1,\dots,N. \]

*We can further assume that \(\varepsilon_i\) are independent from each other and all have the same standard deviation.

To have a useful model for prediction, we need \(\beta_0\) and \(\beta_1\). We estimate these from the data.

In practice, linear models are just assumed without necessarily assuming normality: the distribution of the \(\varepsilon\)s is not necessarily specified. Nevertheless, if your data is bivariate normal, the above linear model holds. If your data is not bivariate normal, then you will need to have other ways of justifying the model.

One reason linear models are popular is that they are interpretable.

In the case of Galton’s data, we can interpret the data like this: due to inherited genes, the son’s height prediction grows by \(\beta_1\) for each inch we increase the father’s height \(x\).

Because not all sons with fathers of height \(x\) are of equal height, we need the term \(\varepsilon\), which explains the remaining variability.

This remaining variability includes the mother’s genetic effect, environmental factors, and other biological randomness.

Given how we wrote the model above, the intercept \(\beta_0\) is not very interpretable as it is the predicted height of a son with a father with no height. Due to regression to the mean, the prediction will usually be a bit larger than 0.

To make the slope parameter more interpretable, we can rewrite the model slightly as:

\[ Y_i = \beta_0 + \beta_1 (x_i - \bar{x}) + \varepsilon_i, \, i=1,\dots,N \]

- In this case \(\beta_0\) represents the height when \(x_i = \bar{x}\), which is the height of the son of an average father.

20.10 Least Squares Estimates

The standard approach to estimate the \(\beta\)s is to find the values that minimize the distance of the fitted model to the data:

\[ RSS = \sum_{i=1}^n \left\{ y_i - \left(\beta_0 + \beta_1 x_i \right)\right\}^2 \]

This quantity is called the residual sum of squares (RSS).

Once we find the values that minimize the RSS, we will call the values the least squares estimates (LSE) and denote them with \(\hat{\beta}_0\) and \(\hat{\beta}_1\).

Let’s demonstrate this with the previously defined dataset:

library(HistData)

set.seed(1983)

galton_heights <- GaltonFamilies |>

filter(gender == "male") |>

group_by(family) |>

sample_n(1) |>

ungroup() |>

select(father, childHeight) |>

rename(son = childHeight)Let’s write a function that computes the RSS for any pair of values \(\beta_0\) and \(\beta_1\).

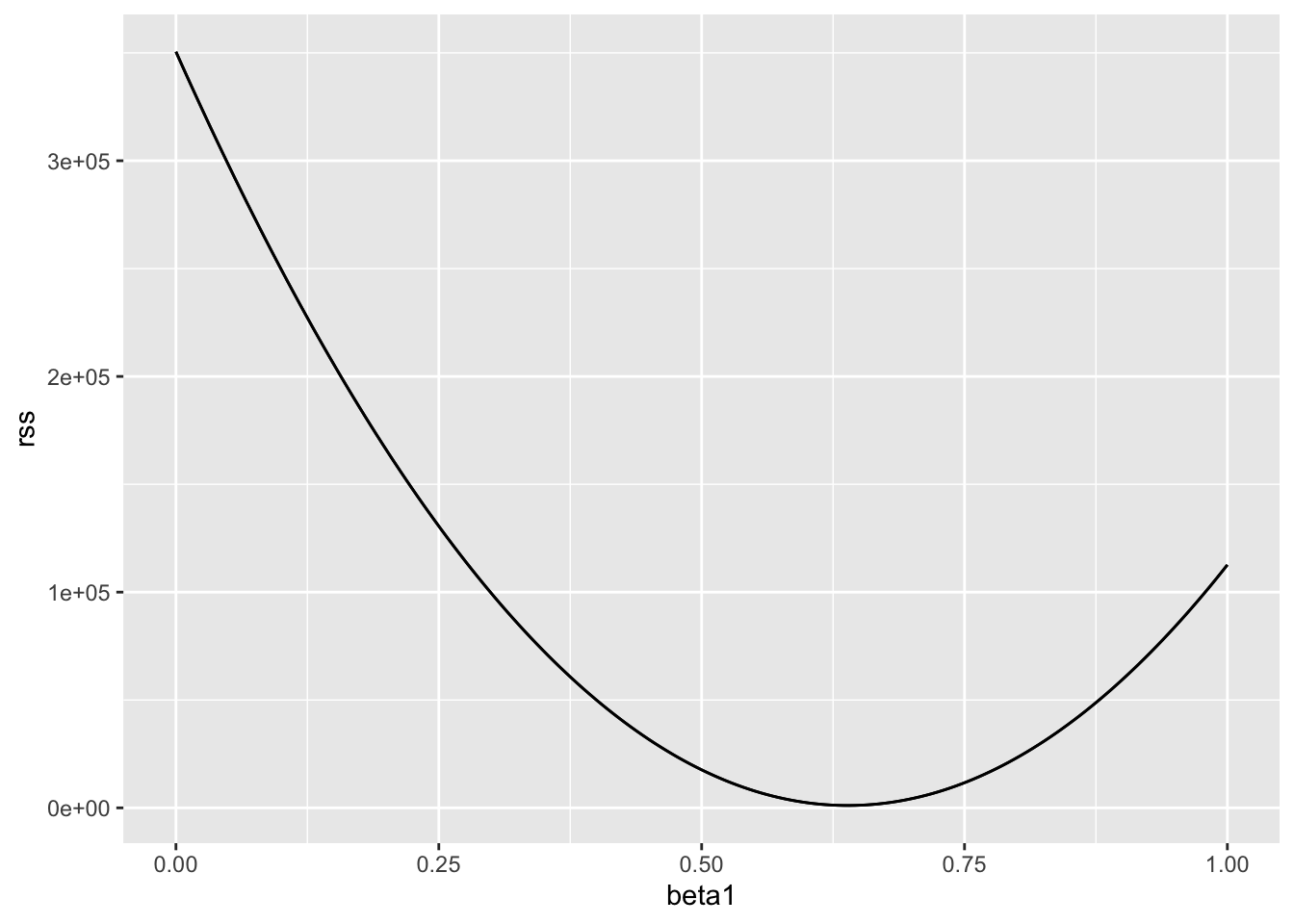

rss <- function(beta0, beta1, data){

resid <- galton_heights$son - (beta0 + beta1*galton_heights$father)

return(sum(resid^2))

}So for any pair of values, we get an RSS. Here is a plot of the RSS as a function of \(\beta_1\) when we keep the \(\beta_0\) fixed at 25.

beta1 = seq(0, 1, length = nrow(galton_heights))

results <- data.frame(beta1 = beta1,

rss = sapply(beta1, rss, beta0 = 25))

results |> ggplot(aes(beta1, rss)) + geom_line() +

geom_line(aes(beta1, rss))

We can see a clear minimum for \(\beta_1\) at around 0.65. However, this minimum for \(\beta_1\) is for when \(\beta_0 = 25\), a value we arbitrarily picked. We don’t know if (25, 0.65) is the pair that minimizes the equation across all possible pairs. We don’t need trial and error because we can use calculus.

20.11 The lm function

In R, we can obtain the least squares estimates using the lm function. To fit the model:

\[ Y_i = \beta_0 + \beta_1 x_i + \varepsilon_i \]

with \(Y_i\) the son’s height and \(x_i\) the father’s height, we can use this code to obtain the least squares estimates.

fit <- lm(son ~ father, data = galton_heights)

fit$coef(Intercept) father

37.287605 0.461392 The most common way we use

lmis by using the character~to letlmknow which is the variable we are predicting (left of~) and which we are using to predict (right of~).The intercept is added automatically to the model that will be fit. To fit a model with no intercept you need to use

-1in the rightside of the formula.The object

fitincludes more information about the fit. We can use the functionsummaryto extract more of this information (not shown):

summary(fit)

Call:

lm(formula = son ~ father, data = galton_heights)

Residuals:

Min 1Q Median 3Q Max

-9.3543 -1.5657 -0.0078 1.7263 9.4150

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 37.28761 4.98618 7.478 3.37e-12 ***

father 0.46139 0.07211 6.398 1.36e-09 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 2.45 on 177 degrees of freedom

Multiple R-squared: 0.1878, Adjusted R-squared: 0.1833

F-statistic: 40.94 on 1 and 177 DF, p-value: 1.36e-09- To understand some of the information included in this summary we need to remember that the LSE are random variables. Mathematical statistics gives us some ideas of the distribution of these random variables.

20.12 LSE are random variables

The LSE is derived from the data \(y_1,\dots,y_N\), which are a realization of random variables \(Y_1, \dots, Y_N\). This implies that our estimates are random variables. Here is a Monte Carlo simulation demonstrating it:

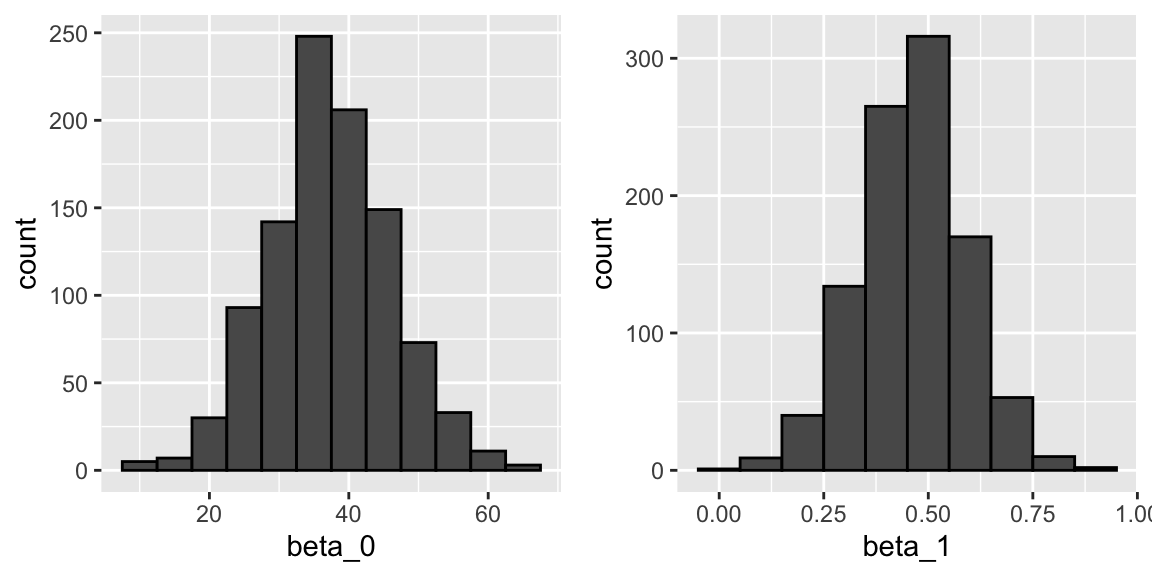

B <- 1000

N <- 50

lse <- replicate(B, {

sample_n(galton_heights, N, replace = TRUE) |>

lm(son ~ father, data = _) |>

coef()

})

lse <- data.frame(beta_0 = lse[1,], beta_1 = lse[2,]) We can see the variability of the estimates by plotting their distributions:

Attaching package: 'gridExtra'The following object is masked from 'package:dplyr':

combine

The reason these look normal is because the central limit theorem applies here as well.

The standard errors are a bit complicated to compute, but mathematical theory does allow us to compute them and they are included in the summary provided by the

lmfunction.Here it is for one of our simulated data sets:

sample_n(galton_heights, N, replace = TRUE) |>

lm(son ~ father, data = _) |>

summary() |>

coef() Estimate Std. Error t value Pr(>|t|)

(Intercept) 19.2791952 11.6564590 1.653950 0.1046637693

father 0.7198756 0.1693834 4.249977 0.0000979167/8 You can see that the standard errors estimates reported by the summary are close to the standard errors from the simulation:

lse |> summarize(se_0 = sd(beta_0), se_1 = sd(beta_1)) se_0 se_1

1 8.83591 0.1278812The summary function also reports t-statistics (t value) and p-values (Pr(>|t|)). The t-statistic is not actually based on the central limit theorem but rather on the assumption that the \(\varepsilon\)s follow a normal distribution. Under this assumption, mathematical theory tells us that the LSE divided by their standard error, \(\hat{\beta}_0 / \hat{\mbox{SE}}(\hat{\beta}_0 )\) and \(\hat{\beta}_1 / \hat{\mbox{SE}}(\hat{\beta}_1 )\), follow a t-distribution with \(N-p\) degrees of freedom, with \(p\) the number of parameters in our model. In the case of height \(p=2\), the two p-values are testing the null hypothesis that \(\beta_0 = 0\) and \(\beta_1=0\), respectively.

Although we do not show examples in this lecture, hypothesis testing with regression models is commonly used in epidemiology and economics to make statements such as “the effect of A on B was statistically significant after adjusting for X, Y, and Z”. However, several assumptions have to hold for these statements to be true.

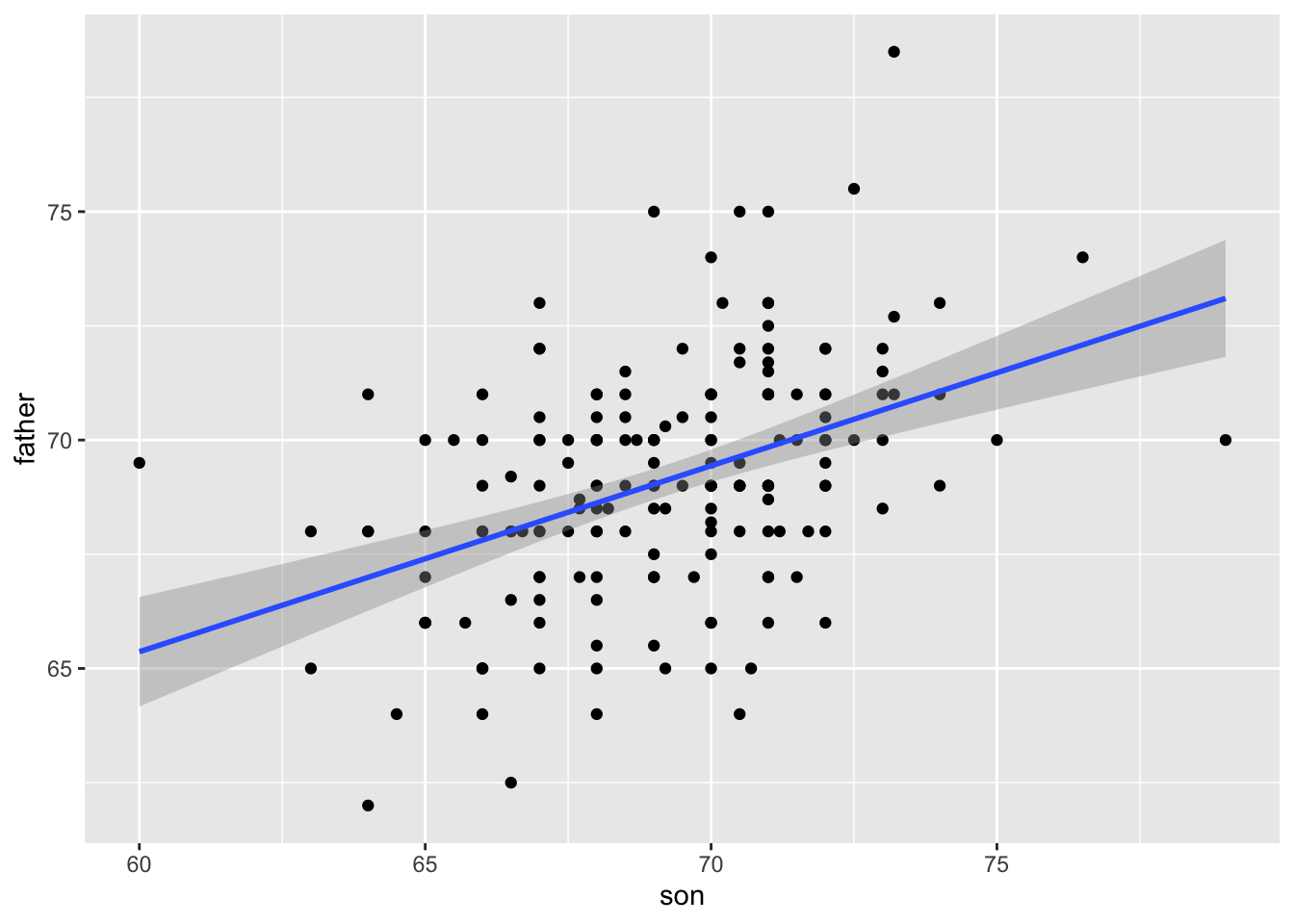

20.13 Predicted values are random variables

Once we fit our model, we can obtain prediction of \(Y\) by plugging in the estimates into the regression model. For example, if the father’s height is \(x\), then our prediction \(\hat{Y}\) for the son’s height will be:

\[\hat{Y} = \hat{\beta}_0 + \hat{\beta}_1 x\]

When we plot \(\hat{Y}\) versus \(x\), we see the regression line.

The ggplot2 layer geom_smooth(method = "lm") that we previously used plots \(\hat{Y}\) and surrounds it by confidence intervals:

galton_heights |> ggplot(aes(son, father)) +

geom_point() +

geom_smooth(method = "lm")`geom_smooth()` using formula = 'y ~ x'

- The R function

predicttakes anlmobject as input and returns the prediction. If requested, the standard errors and other information from which we can construct confidence intervals is provided:

fit <- galton_heights |> lm(son ~ father, data = _)

y_hat <- predict(fit, se.fit = TRUE)

names(y_hat)[1] "fit" "se.fit" "df" "residual.scale"20.14 Diagnostic plots

When the linear model is assumed rather than derived, all interpretations depend on the usefulness of the model. The lm function will fit the model and return summaries even when the model is wrong and unuseful.

- Visually inspecting residuals, defined as the difference between observed values and predicted values

\[ r = Y - \hat{Y} = Y - \left(\hat{\beta}_0 - \hat{\beta}_1 x_i\right), \]

and summaries of the residuals, is a powerful way to diagnose if the model is useful.

- Note that the residuals can be thought of estimates of the errors since

\[ \varepsilon = Y - \left(\beta_0 + \beta_1 x_i \right). \]

In fact, residuals are often denoted as \(\hat{\varepsilon}\). This motivates several diagnostic plots. Becasue we obervere, \(r\) but don’t observe \(\varepsilon\), we based the plots on the residuals.

Because the errors are assumed not to depend on the expected value of \(Y\), a plot of \(r\) versus the fitted values \(\hat{Y}\) should show no relationship.

In cases in which we assume the errors follow a normal distribtuion a qqplot of standardized \(r\) should fall on a line when plotted against theoretical quantiles.

Because we assume the standard deviation of the errors is constant, if we plot the absolute value of the residuals, it should appear constant.

We prefer plots rather than summaries based on, for example, correlation because, as noted in Section @ascombe, correlation is not always the best summary of association. The function plot applied to an lm object automatically plots these.

plot(fit, which = 1:3)This function can produce six different plots, and the argument which let’s you specify which you want to see. You can learn more by reading the plot.lm help file.

20.15 The regression fallacy

Wikipedia defines the sophomore slump as:

A sophomore slump or sophomore jinx or sophomore jitters refers to an instance in which a second, or sophomore, effort fails to live up to the standards of the first effort. It is commonly used to refer to the apathy of students (second year of high school, college or university), the performance of athletes (second season of play), singers/bands (second album), television shows (second seasons) and movies (sequels/prequels).

In Major League Baseball, the rookie of the year (ROY) award is given to the first-year player who is judged to have performed the best. The sophmore slump phrase is used to describe the observation that ROY award winners don’t do as well during their second year. For example, this Fox Sports article asks “Will MLB’s tremendous rookie class of 2015 suffer a sophomore slump?”.

Does the data confirm the existence of a sophomore slump? Let’s take a look. Examining the data for widely used measure of success, the batting average, we see that this observation holds true for the top performing ROYs:

| nameFirst | nameLast | rookie_year | rookie | sophomore |

|---|---|---|---|---|

| Willie | McCovey | 1959 | 0.3541667 | 0.2384615 |

| Ichiro | Suzuki | 2001 | 0.3497110 | 0.3214838 |

| Al | Bumbry | 1973 | 0.3370787 | 0.2333333 |

| Fred | Lynn | 1975 | 0.3314394 | 0.3136095 |

| Albert | Pujols | 2001 | 0.3288136 | 0.3135593 |

In fact, the proportion of players that have a lower batting average their sophomore year is 0.6981132.

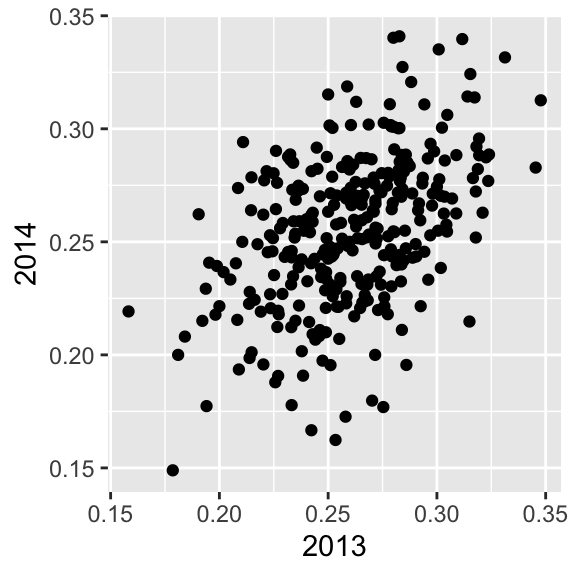

So is it “jitters” or “jinx”? To answer this question, let’s turn our attention to all players that played the 2013 and 2014 seasons and batted more than 130 times (minimum to win Rookie of the Year).

`summarise()` has grouped output by 'playerID'. You can override using the

`.groups` argument.The same pattern arises when we look at the top performers: batting averages go down for most of the top performers.

| nameFirst | nameLast | 2013 | 2014 |

|---|---|---|---|

| Miguel | Cabrera | 0.3477477 | 0.3126023 |

| Hanley | Ramirez | 0.3453947 | 0.2828508 |

| Michael | Cuddyer | 0.3312883 | 0.3315789 |

| Scooter | Gennett | 0.3239437 | 0.2886364 |

| Joe | Mauer | 0.3235955 | 0.2769231 |

But these are not rookies! Also, look at what happens to the worst performers of 2013:

| nameFirst | nameLast | 2013 | 2014 |

|---|---|---|---|

| Danny | Espinosa | 0.1582278 | 0.2192192 |

| Dan | Uggla | 0.1785714 | 0.1489362 |

| Jeff | Mathis | 0.1810345 | 0.2000000 |

| B. J. | Upton | 0.1841432 | 0.2080925 |

| Adam | Rosales | 0.1904762 | 0.2621951 |

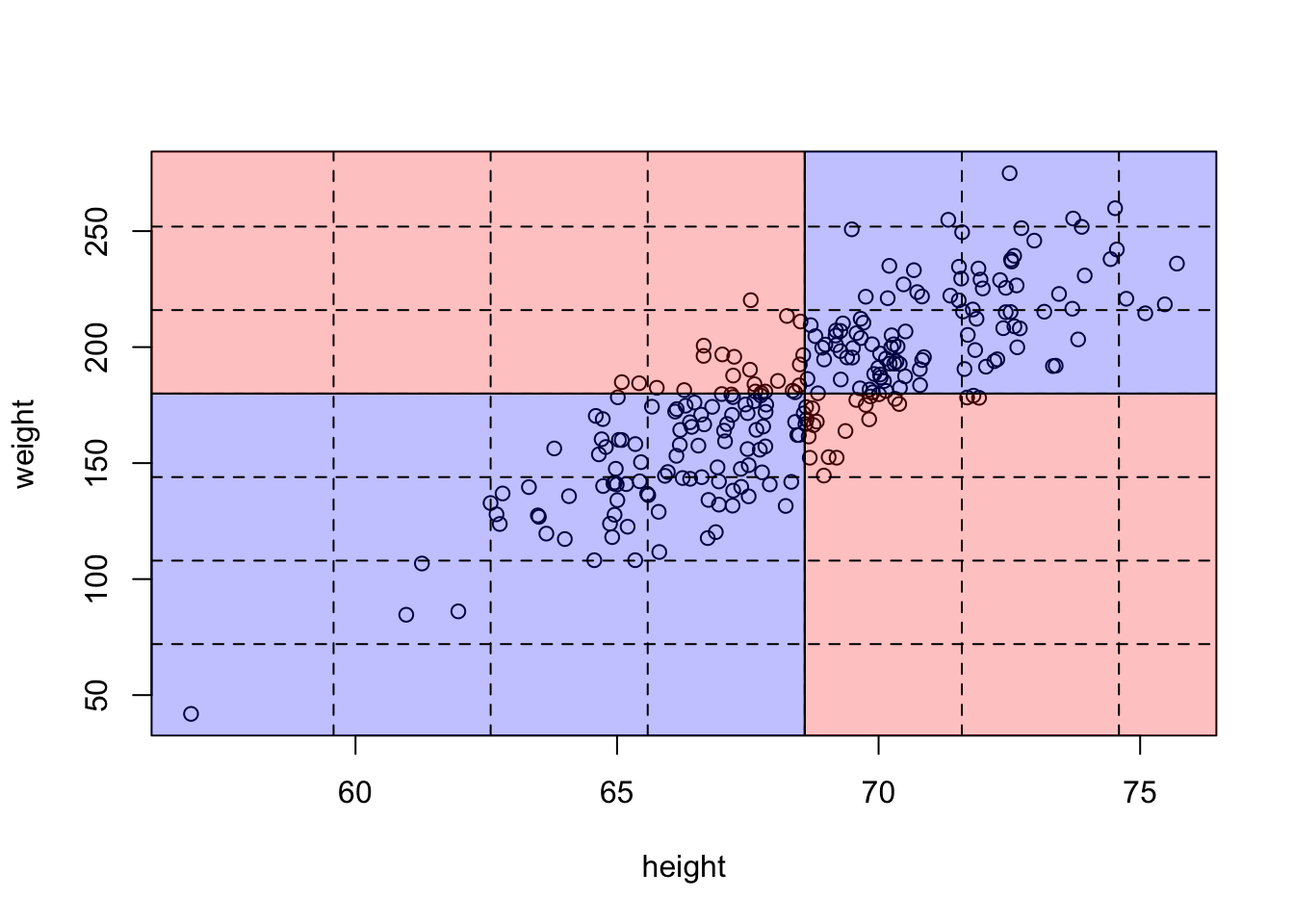

Their batting averages mostly go up! Is this some sort of reverse sophomore slump? It is not. There is no such thing as the sophomore slump. This is all explained with a simple statistical fact: the correlation for performance in two separate years is high, but not perfect:

The correlation is 0.460254 and the data look very much like a bivariate normal distribution, which means we predict a 2014 batting average \(Y\) for any given player that had a 2013 batting average \(X\) with:

\[ \frac{Y - .255}{.032} = 0.46 \left( \frac{X - .261}{.023}\right) \]

Because the correlation is not perfect, regression tells us that, on average, expect high performers from 2013 to do a bit worse in 2014. It’s not a jinx; it’s just due to chance. The ROY are selected from the top values of \(X\) so it is expected that \(Y\) will regress to the mean.

20.16 Exercises

1. Load the GaltonFamilies data from the HistData. The children in each family are listed by gender and then by height. Create a dataset called galton_heights by picking a male and female at random.

2. Make a scatterplot for heights between mothers and daughters, mothers and sons, fathers and daughters, and fathers and sons.

3. Compute the correlation in heights between mothers and daughters, mothers and sons, fathers and daughters, and fathers and sons.